Air Hockey Robot

A custom arm robot designed to play Air Hockey against a human opponent via object detection and tracking from an overhead camera.

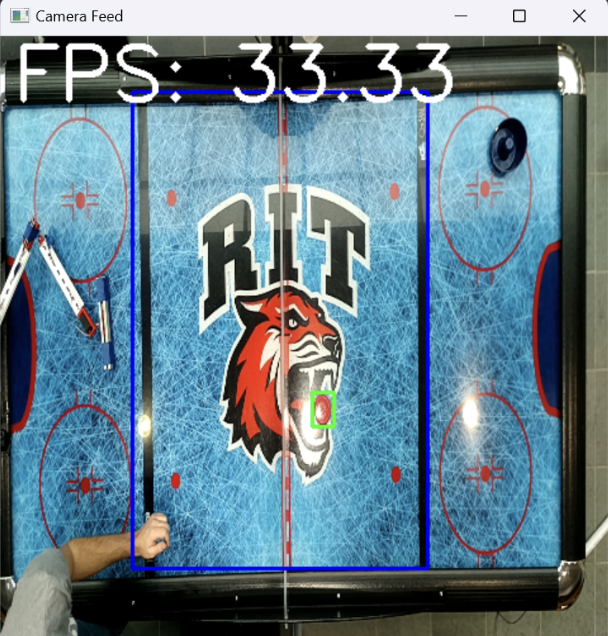

Robot in Action

Here the robot defends its goal.

It misses a puck on the right because we intentionally set the workspace limits narrower than the table to avoid robot suicide :)

Overview

This was the final project for my robot perception course, completed with fellow Electrical Engineering major Quinn Leydon. We designed an arm robot that plays air hockey against a human using real-time detection and trajectory estimation of the puck from an overhead camera.

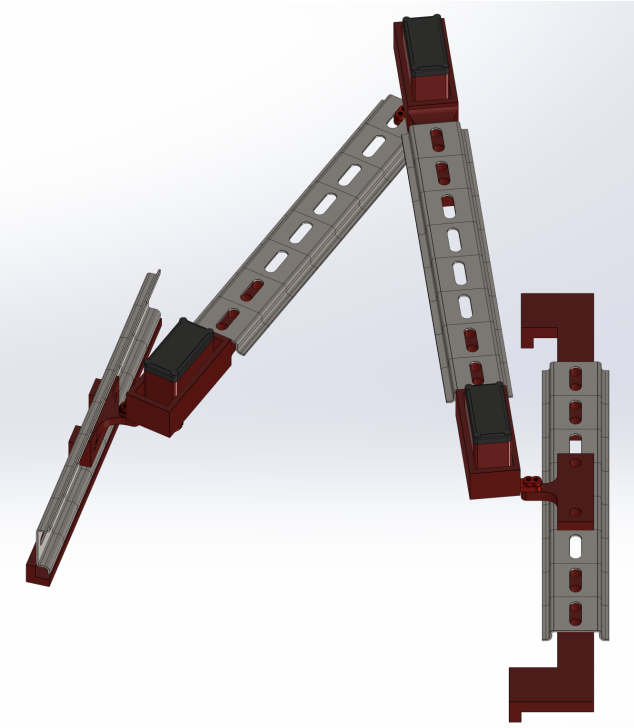

To complete this project, I designed a custom 3-degree-of-freedom arm in SolidWorks and assembled it from a combination of 3D-printed parts and DIN rail.

We collected a large dataset of images of the puck on the table and used a semi-supervised approach to build the training data for trajectory prediction: we labeled a subset of the images, trained a detection model on them, and used that model to label the remaining data.
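The labeling step follows the usual pseudo-labeling pattern: the detector trained on the hand-labeled subset proposes boxes on unlabeled frames, and only confident detections are kept as labels. Below is a minimal sketch, assuming detections arrive as pixel-space tuples `(x1, y1, x2, y2, confidence, class_id)` and labels are written in the YOLO text format (one `class x_center y_center width height` line per object, normalized to [0, 1]). The function name and the 0.5 threshold are hypothetical, not the project's values.

```python
from typing import Iterable, List, Tuple

# Hypothetical detection tuple: (x1, y1, x2, y2, confidence, class_id), in pixels.
Detection = Tuple[float, float, float, float, float, int]


def to_yolo_labels(
    detections: Iterable[Detection],
    img_w: int,
    img_h: int,
    conf_threshold: float = 0.5,  # assumed cutoff, not the project's value
) -> List[str]:
    """Convert confident detections into YOLO-format label lines."""
    lines = []
    for x1, y1, x2, y2, conf, cls in detections:
        if conf < conf_threshold:
            continue  # skip uncertain pseudo-labels instead of training on noise
        cx = (x1 + x2) / 2 / img_w  # normalized box center
        cy = (y1 + y2) / 2 / img_h
        w = (x2 - x1) / img_w       # normalized box size
        h = (y2 - y1) / img_h
        lines.append(f"{cls} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}")
    return lines
```

For example, `to_yolo_labels([(100, 50, 140, 90, 0.92, 0), (10, 10, 20, 20, 0.3, 0)], 640, 480)` keeps only the first box and returns `["0 0.187500 0.145833 0.062500 0.083333"]`. Raising the threshold trades fewer pseudo-labels for cleaner ones.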

Dataset and Detection Model

- Collected 26,627 images during live gameplay and labeled 443 of them, including 64 notably challenging frames in which the red puck overlaps the orange tiger.

- Trained a custom YOLOv8 model on the labeled images.

- Achieved an excellent F1 score with a strong balance between precision and recall.

- Used for real-time detection and localization of the puck and of key points on the table.

- Used to label the remaining unlabeled images.

- For detailed analysis, including full precision-recall curves and insights into false detections when the robot is involved, refer to our paper at the bottom of the page.

- Cleaned the full dataset by removing frames in which the puck was not in motion, leaving 15,763 images.

- Augmented the dataset by horizontally flipping each frame of the table, for a total of 31,525 images; this doubles the number of trajectories with puck motion toward the robot.
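Mirroring a frame left to right maps every puck position x to W − x while leaving its position along the table unchanged, so each recorded trajectory yields a second, mirror-image trajectory that still moves toward the robot. A sketch on puck-coordinate sequences rather than raw images, assuming x runs across the table width W and y runs along the table toward the robot; the function names and coordinate convention are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x across the table, y along the table toward the robot)


def mirror_trajectory(traj: List[Point], table_width: float) -> List[Point]:
    """Mirror a puck trajectory across the table's long axis (x -> W - x)."""
    return [(table_width - x, y) for x, y in traj]


def augment(trajectories: List[List[Point]], table_width: float) -> List[List[Point]]:
    """Return the original trajectories plus one mirrored copy of each."""
    return trajectories + [mirror_trajectory(t, table_width) for t in trajectories]
```

For the images themselves, the equivalent flip on a NumPy H×W×C array is `image[:, ::-1]`, and a YOLO-format box center becomes `1 - x_center`.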

Trajectory Prediction

Goal: Determine where the robot must meet the puck on the black line.

Methodology

- Physics Model

  - Assumes linear motion of the puck.

  - Uses an adaptation of Snell’s Law to predict the puck's reflection angles at the table walls, accounting for table geometry.

- LSTM Model

  - Trained on the augmented dataset of 31,525 images.

  - Used to predict the puck's trajectory.

  - The idea is that the LSTM's memory of earlier puck positions lets it learn some of the table's nonlinearities.
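Under the linear-motion assumption, the intercept can be found by extrapolating the puck's velocity to the robot's line and folding the result back onto the table whenever it crosses a side wall. This sketch treats each bounce as an ideal mirror reflection (angle of incidence equals angle of reflection) and the puck as a point, which may differ from the project's adapted Snell’s Law model. The coordinate frame, the two-point velocity estimate, and the function names are assumptions.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x across the table, y along the table); robot line at y = line_y


def reflect_into_range(x: float, width: float) -> float:
    """Fold an unbounded x coordinate into [0, width] as if bouncing off both side walls."""
    period = 2 * width
    x = x % period  # Python's % returns a non-negative result for a positive period
    return period - x if x > width else x


def predict_intercept(
    track: Sequence[Point],
    dt: float,
    line_y: float,
    width: float,
) -> Optional[Tuple[float, float]]:
    """Predict (x, time_to_hit) where the puck crosses y = line_y, or None if it moves away.

    Velocity comes from the last two detections (a simplifying choice; a
    least-squares fit over more points would be less sensitive to noise).
    """
    if len(track) < 2:
        return None
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    if vy == 0 or (line_y - y1) / vy < 0:
        return None  # puck is not heading toward the line
    t_hit = (line_y - y1) / vy
    x_unfolded = x1 + vx * t_hit  # straight-line position, ignoring the walls
    return reflect_into_range(x_unfolded, width), t_hit
```

For a 10-unit-wide table with the robot line at y = 0, `predict_intercept([(5, 10), (6, 9)], 1.0, 0.0, 10.0)` returns `(5.0, 9.0)`: the puck hits the right wall at x = 10 after 4 steps and travels back to x = 5 by the time it reaches the line. Folding the unbounded x handles any number of bounces without simulating each one.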

Results

- In simulation, both models predicted an intercept point on the line within our 4-inch margin of error of the ground truth for over 80% of the tested trajectories.

- The physics model was more accurate in testing than the LSTM model.

- The physics model allowed the robot to consistently meet the puck.

- Failures of the 3D-printed parts prevented us from fully deploying the LSTM model on the robot, though it did show promise.
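The accuracy figure above is a hit rate: the fraction of test trajectories whose predicted intercept lies within the 4-inch margin of the ground-truth crossing point. A minimal way to compute it, assuming predictions and ground truth are x positions along the line in inches; the function name is hypothetical and the example values are illustrative, not project results.

```python
from typing import Sequence


def hit_rate(predicted: Sequence[float], actual: Sequence[float], margin: float = 4.0) -> float:
    """Fraction of predictions within `margin` (same units, e.g. inches) of the ground truth."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not actual:
        return 0.0
    hits = sum(abs(p - a) <= margin for p, a in zip(predicted, actual))
    return hits / len(actual)
```

For instance, `hit_rate([1, 10, 20], [2, 15, 20])` counts two of three predictions as hits (errors of 1 and 0 inches; the 5-inch miss falls outside the margin).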

Hypothesis: A physics-informed LSTM may improve accuracy over a strictly linear physics model if it can learn some of the table's higher-order imperfections. Further testing could include a model that takes in both the trajectory and a prediction from the physics model to improve the accuracy of the LSTM's output.
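One way to realize this idea is to attach the physics model's prediction to every time step of the LSTM's input window and train the network on the residual (true intercept minus physics prediction), so it only has to learn what the linear model misses. A sketch of building such training samples; the window length, the `[x, y, physics_x]` feature layout, the residual target, and passing the physics predictor in as a function are all assumptions, and the LSTM itself is left out.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]


def build_samples(
    track: Sequence[Point],
    true_intercept: float,
    physics_predict: Callable[[Sequence[Point]], float],
    window: int = 10,  # hypothetical history length
) -> List[Tuple[List[List[float]], float]]:
    """Slide a fixed window over one trajectory and emit (features, target) pairs.

    Each time step carries [x, y, physics_x], where physics_x is the physics
    model's intercept estimate from the points seen so far. The target is the
    residual (true - physics) at the window's last step. The caller must supply
    a predictor that returns a number even for very short prefixes.
    """
    samples = []
    for end in range(window, len(track) + 1):
        steps = []
        for i in range(end - window, end):
            px = physics_predict(track[: i + 1])
            steps.append([track[i][0], track[i][1], px])
        residual = true_intercept - steps[-1][2]
        samples.append((steps, residual))
    return samples
```

At inference time, the network's predicted residual would be added back to the physics estimate, so a poorly trained network degrades gracefully toward the physics model.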

Hardware Overview

- Camera: RGB sensor on an OAK-D camera at 60 FPS

- GPU: Nvidia RTX 3080 Ti Laptop GPU

- Inference Speed (Detection + Trajectory Estimation): 57 FPS (without video rendering), 33 FPS (with video rendering)

Analysis: Without video rendering, the pipeline ran at nearly the camera's 60 FPS, so the camera's frame rate was the only bottleneck in the system.

Robot Design Overview

- 3 DoF planar robot

- SSC-32U PWM servo driver

- Custom kinematics library using Denavit–Hartenberg parameters.

- Inverse kinematics lookup table for rapid position adjustments.

- Refer to our paper for the IK derivation for this robot configuration.
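For a planar arm with three revolute joints, the Denavit–Hartenberg convention reduces to a rotation about z and a translation along x per joint (every d_i = 0 and α_i = 0), and multiplying the per-joint transforms gives the end-effector pose. A sketch using the standard DH transform; the all-revolute (RRR) layout and the link lengths are assumptions, since the real robot's parameters are in the paper.

```python
import numpy as np


def dh_transform(theta: float, d: float, a: float, alpha: float) -> np.ndarray:
    """Standard Denavit-Hartenberg homogeneous transform for one joint."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def forward_kinematics(joint_angles, link_lengths) -> np.ndarray:
    """Chain per-joint transforms of a planar RRR arm (d = 0 and alpha = 0 for every joint)."""
    T = np.eye(4)
    for theta, a in zip(joint_angles, link_lengths):
        T = T @ dh_transform(theta, 0.0, a, 0.0)
    return T


# Hypothetical link lengths in inches; not the real robot's dimensions.
LINKS = (8.0, 6.0, 4.0)
```

The end-effector position is the translation column: `forward_kinematics([0.0, 0.0, 0.0], LINKS)[:2, 3]` is approximately `[18, 0]` (arm fully stretched along x). Keeping the general DH form, rather than hard-coding planar trigonometry, lets the same code handle non-planar joints by setting `d` and `alpha`.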

Robot CAD Model

Testing Inverse Kinematics Solutions

In this video, the robot uses IK solutions to move its end effector to specific positions, demonstrating its precision and accuracy. The tape measure in front of the robot marks the width of the table's playing surface.
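An IK lookup table trades memory for speed: the expensive work happens offline, and at run time the target position is snapped to a grid cell and the stored joint angles are read back in constant time. The sketch below fills the table by brute-force sampling of joint space through forward kinematics, which is a swapped-in technique, not the project's analytic IK derivation. The planar RRR layout, link lengths, ±90° joint limits, 3° sampling step, and 0.5-inch grid are all hypothetical.

```python
import itertools
import math
from typing import Dict, Optional, Tuple

# Hypothetical arm: link lengths (inches) and joint limits; not the real robot's values.
LINKS = (8.0, 6.0, 4.0)
JOINT_LIMIT = math.radians(90)
CELL = 0.5   # grid resolution in inches
STEPS = 61   # samples per joint from -limit to +limit (3-degree spacing)

Angles = Tuple[float, float, float]


def fk_xy(q: Angles) -> Tuple[float, float]:
    """End-effector (x, y) of a planar RRR arm."""
    x = y = phi = 0.0
    for angle, length in zip(q, LINKS):
        phi += angle  # joint angles accumulate along the chain
        x += length * math.cos(phi)
        y += length * math.sin(phi)
    return x, y


def cell_of(x: float, y: float) -> Tuple[int, int]:
    """Index of the grid cell whose center is nearest to (x, y)."""
    return round(x / CELL), round(y / CELL)


def build_table() -> Dict[Tuple[int, int], Angles]:
    """Map each reached grid cell to the sampled joint angles landing closest to its center."""
    grid = [-JOINT_LIMIT + 2 * JOINT_LIMIT * i / (STEPS - 1) for i in range(STEPS)]
    table: Dict[Tuple[int, int], Angles] = {}
    best: Dict[Tuple[int, int], float] = {}
    for q in itertools.product(grid, repeat=3):
        x, y = fk_xy(q)
        c = cell_of(x, y)
        err = math.hypot(x - c[0] * CELL, y - c[1] * CELL)
        if err < best.get(c, math.inf):
            best[c], table[c] = err, q
    return table


def lookup(table: Dict[Tuple[int, int], Angles], x: float, y: float) -> Optional[Angles]:
    """Return stored joint angles for the cell containing (x, y), or None if unreachable."""
    return table.get(cell_of(x, y))
```

Because both the target and the stored solution fall in the same cell, the reached point is within `CELL * sqrt(2)` (about 0.7 inches here) of the target. The table only needs to be built once at startup or saved to disk; shrinking `CELL` improves precision at the cost of memory and build time.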